Project Information

My role

Conversation designer

Context

'Design and Identity Studio' in college

Time Period

Over a period of 2 weeks, Feb 2021

Mentored by

Syed Gowhar Andrabi

Inspiration for this problem

Source can be found here

Changing the music while driving is something I’ve seen many millennials and Gen Z drivers struggle with.

You keep your eyes on the road while mentally focusing on the bottom-right corner of the phone screen in your left hand, where the ‘Spotify’ app is located. Or you guesstimate the location of the play button after opening the app, which you confirmed from a whir of green and black during a microsecond's glance down at your phone.

Problem Statement

How might we create a less distracting experience for drivers who make use of infotainment within cars?

Larger context for this problem

This problem relates to system interaction and to redesigning aspects of it.

System interaction applies to a variety of contexts, such as one’s experience using an ATM, a mobile application, a smartwatch, or a car.

• Conversation design to help driver system interactions (containing two relevant artefacts)

• Industrial design to help driver system interactions (containing one artefact)

Who are the users?

Drivers who are concerned about how unsafe it is to use a mobile phone while driving, or to fiddle with dashboard buttons for music, A/C controls, etc.

The target is a younger audience, aged 18-45, who are probably more likely to see value in this.

Resources for identifying pain points

After some secondary research using the following sources, I noted down the pain points below.

1. Vehicle Users Consider Distracted Driving Due To Mobile Phone Among Top Causes Of Accidents: Survey

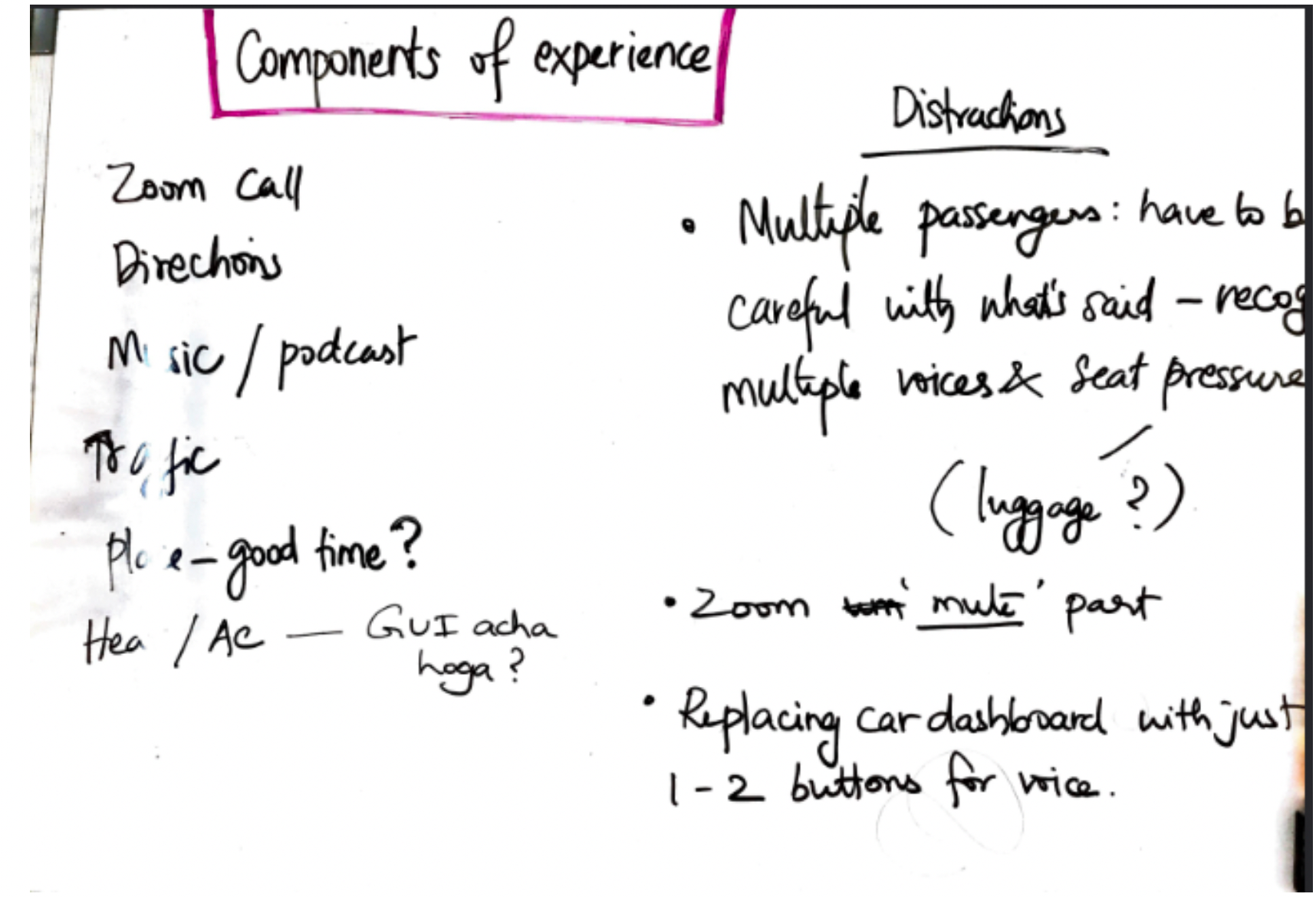

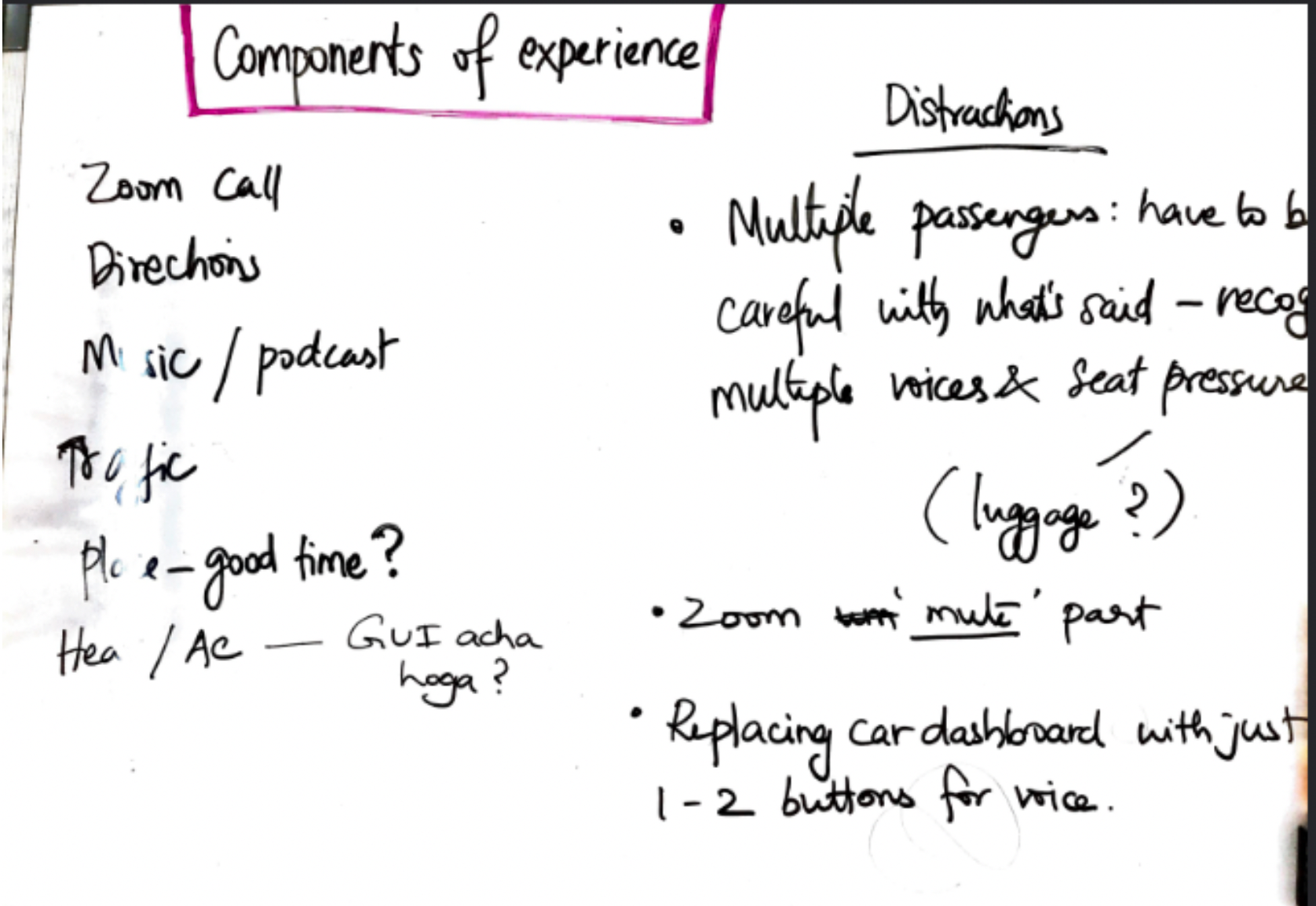

What are the main distractions on the road? (PAIN POINTS)

Feature list for this problem

Ideating around how to solve this problem

I followed Google's conversation design framework and used it to evaluate my work.

Building the System Persona with Ideation, Reflection and Inspiration

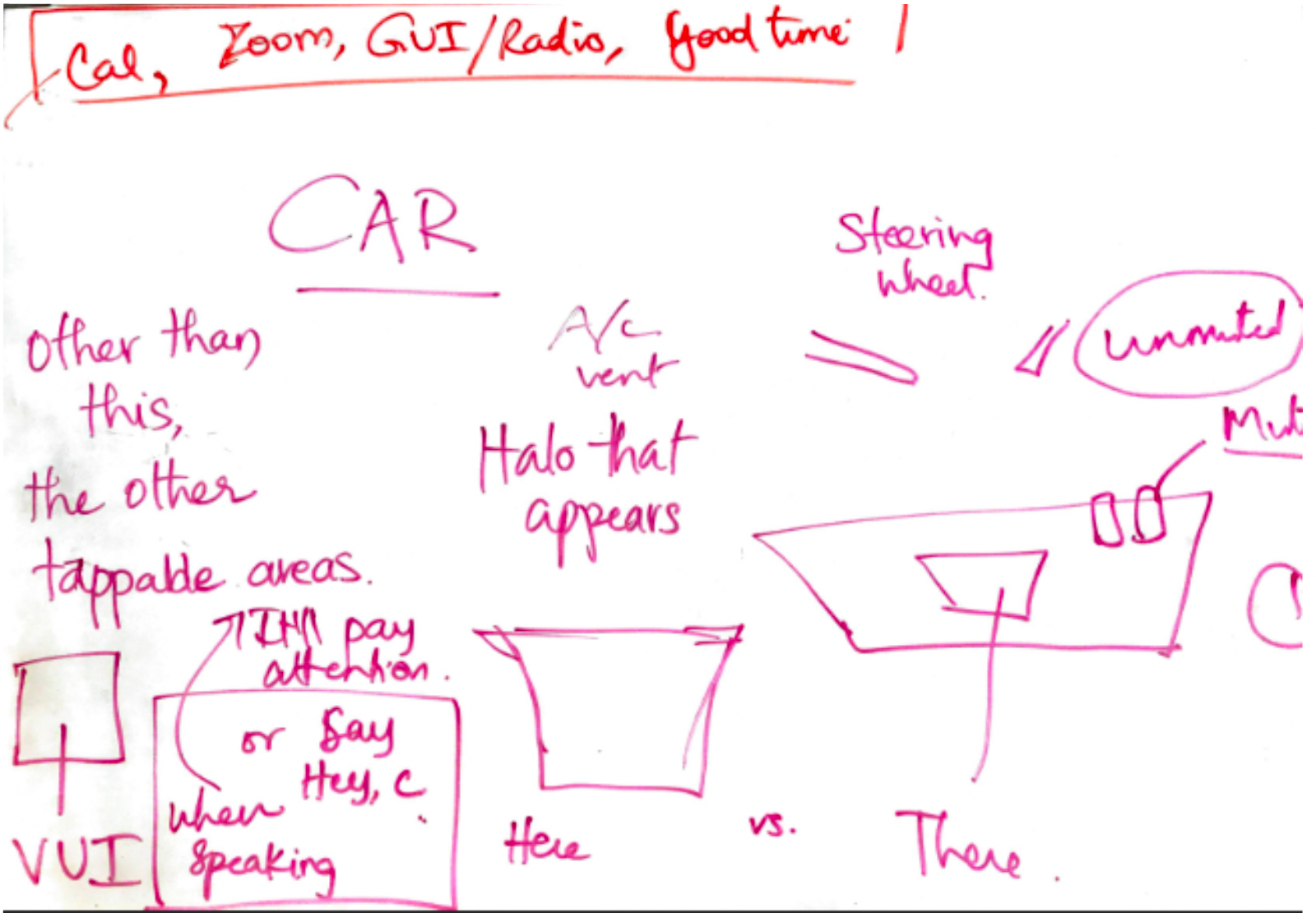

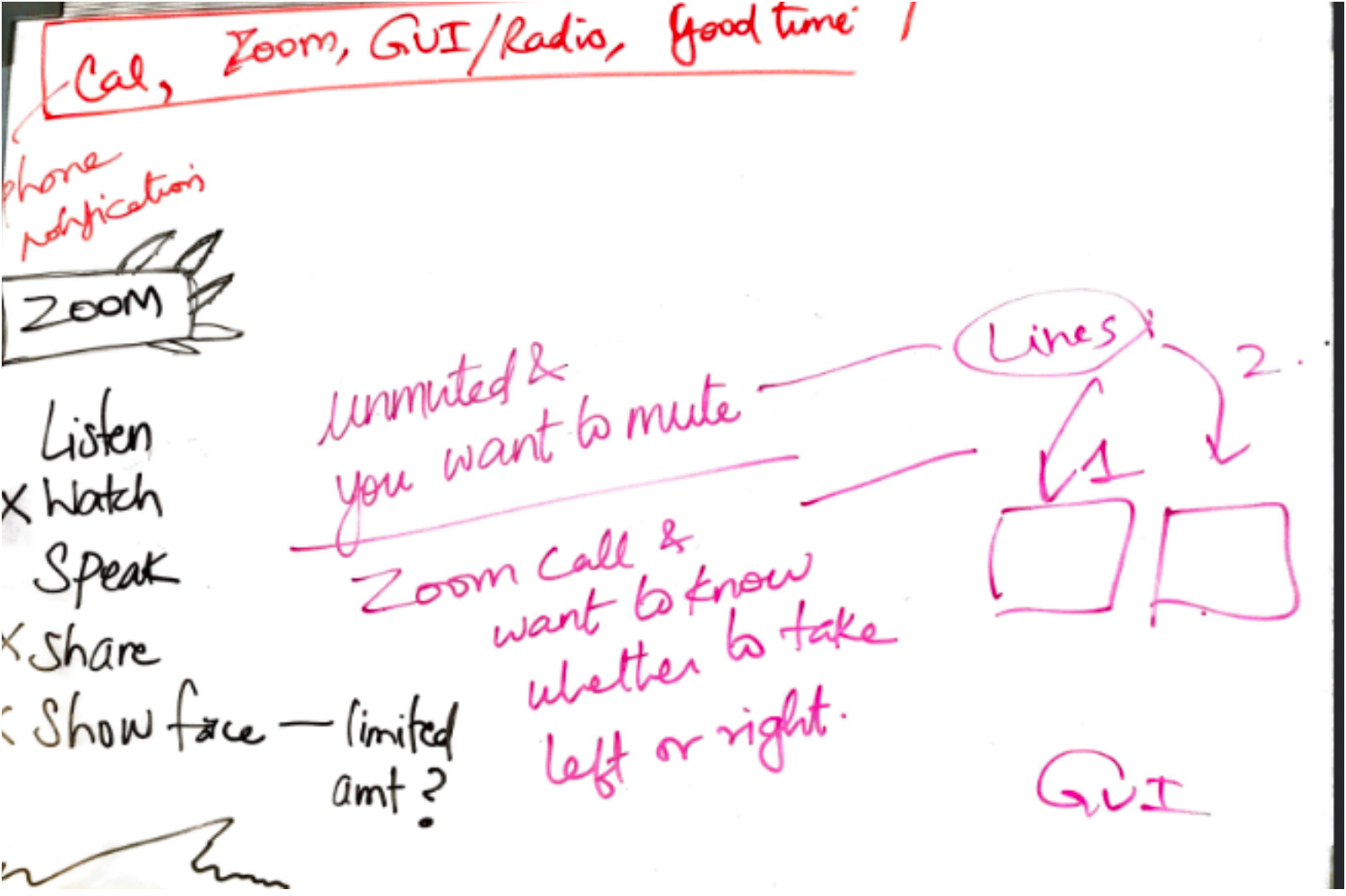

System persona for the voice user interface that'll reside inside the car

Ideating on the user journey we are designing for (Early stage user mapping)

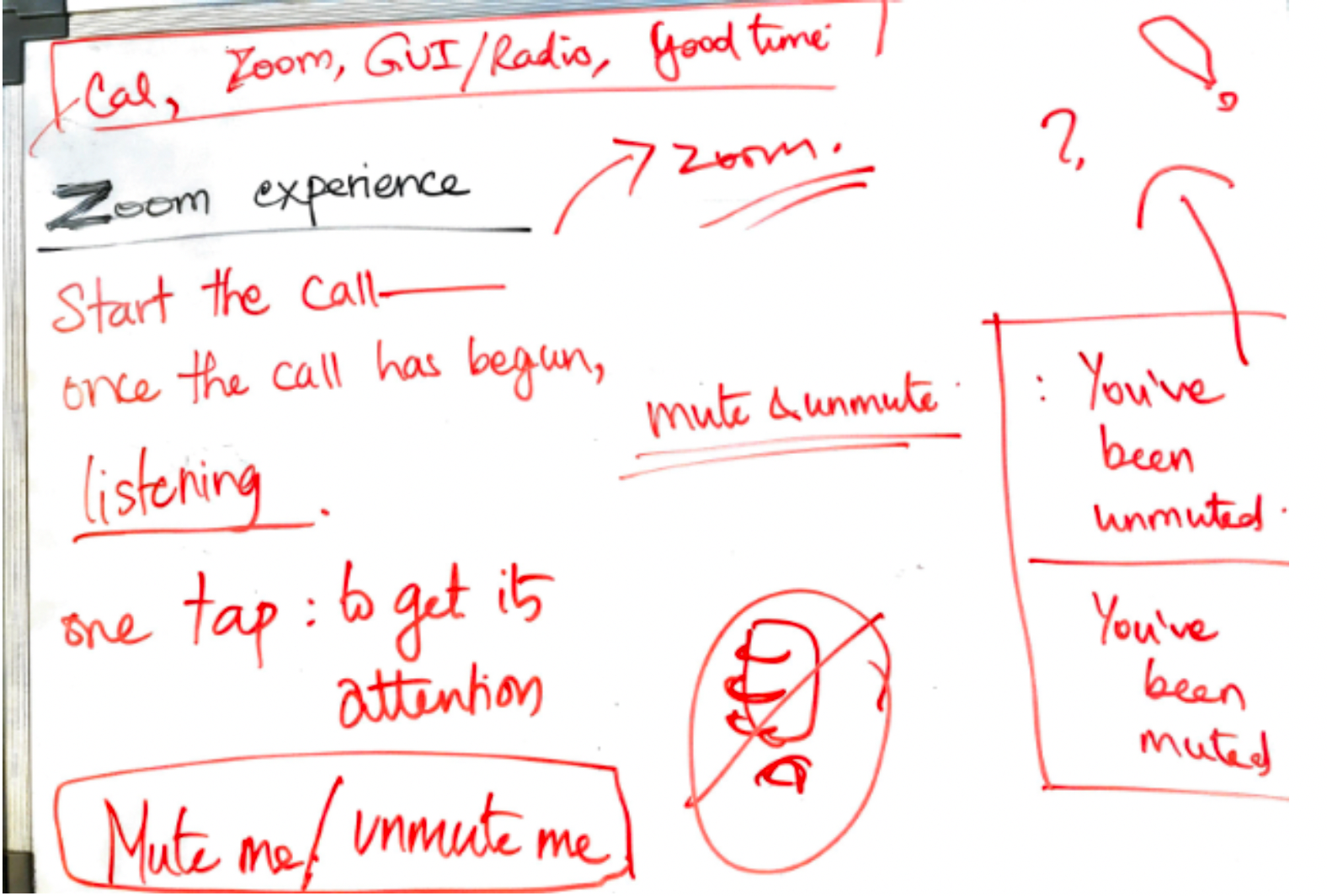

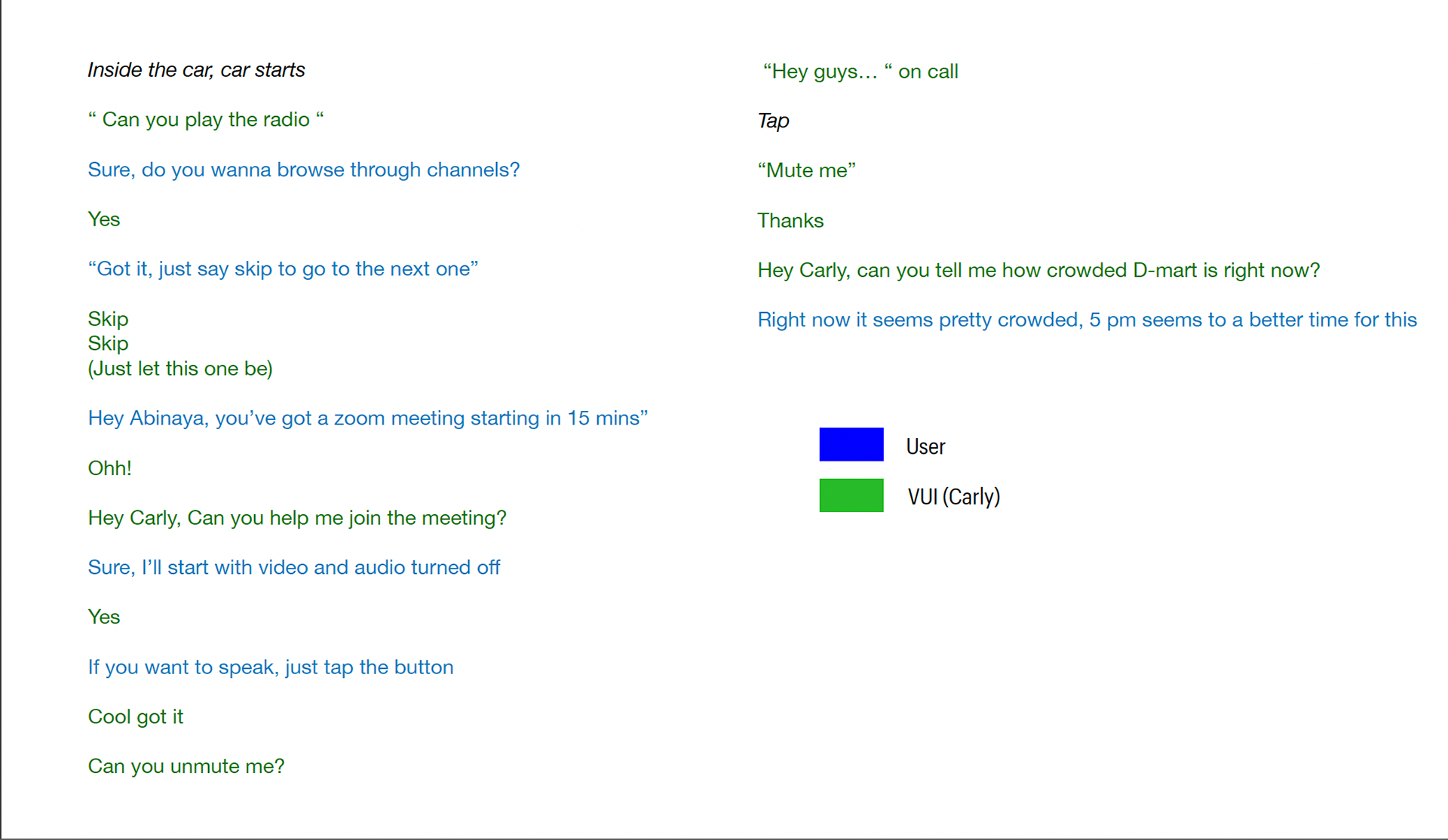

Creating dialogues for the VUI

Iterated Dialogues for VUI in Cars

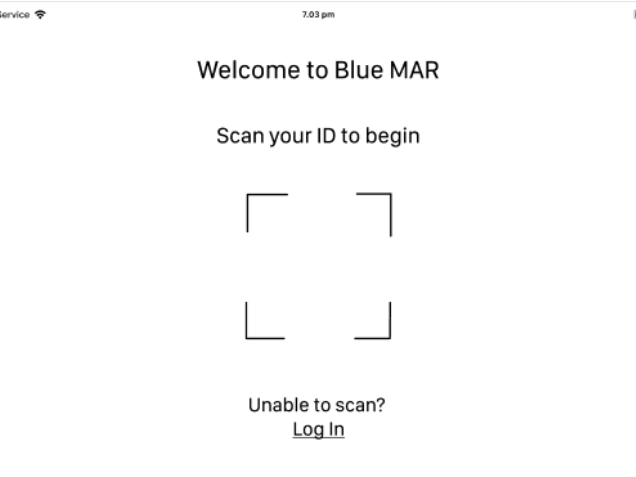

How is the VUI triggered?

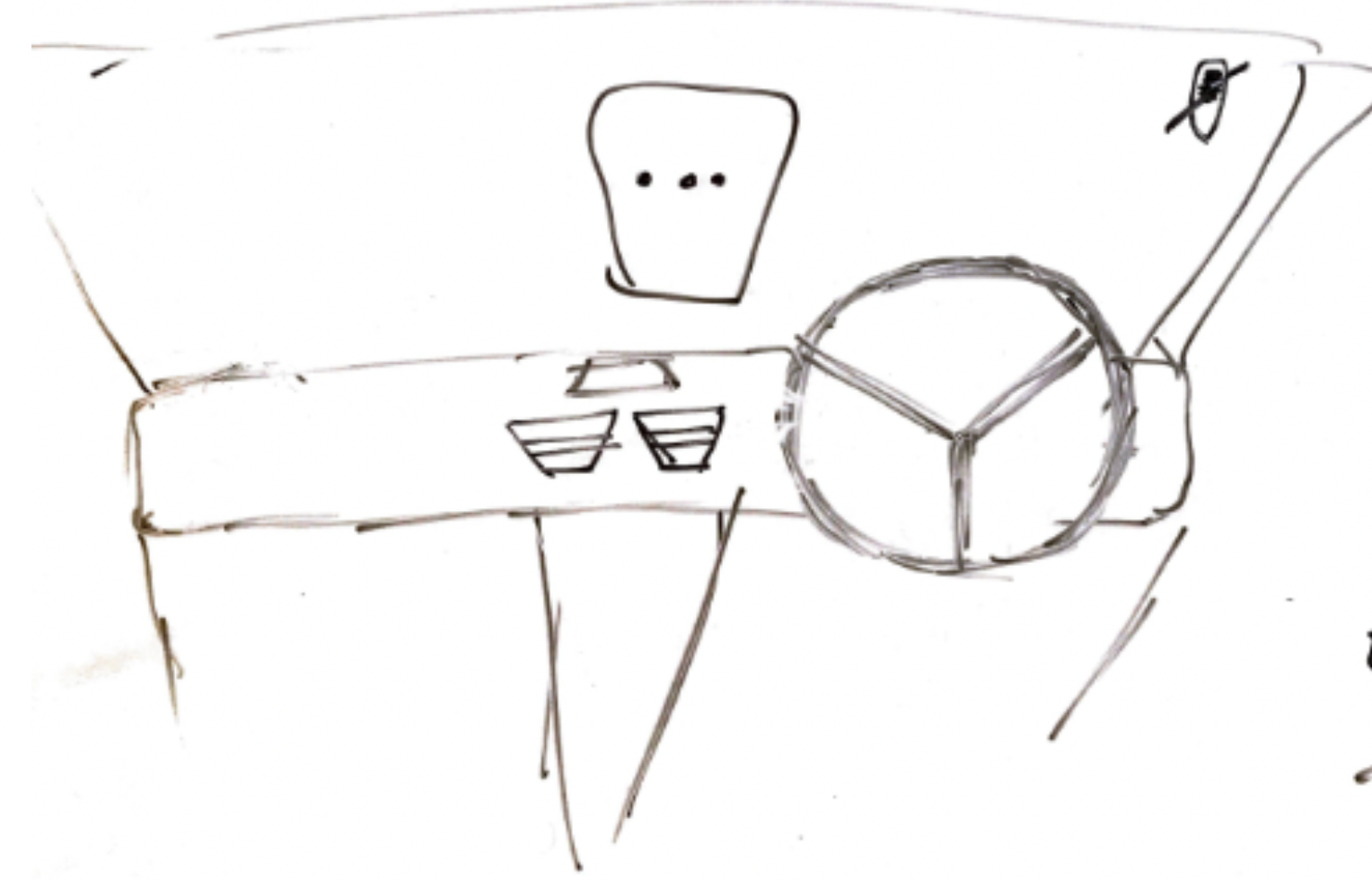

Considering how noisy the streets can be, there have to be ways other than voice to trigger the VUI.

A small button would bring back the original problem. Hence a bigger button that is easy to locate with peripheral vision, and so doesn't require specific focus.

How I went on to test the application

Wizard of Oz Prototyping

1. My friend and I used the experience map above to act out the interaction.

2. I built a makeshift industrial design prop as part of the dashboard to trigger the VUI (it could also be triggered by saying 'Hey Carly').

3. Due to time constraints, I wasn't able to conduct further tests, but this is definitely something to do in the future.

Insights from testing

1. I realized I needed to add unhappy scenarios as well.

2. Test with 5-6 people and interview them: would the VUI be more distracting? Also see whether different design solutions could be a better fit.

3. This was just the beginning, testing one flow. It needed other flows and a clear definition of what can and can't be done.

4. We also needed to identify triggers.

5. Test heads-up displays to see if they're distracting.

Looking forward

"I learnt from a mentor of mine that making something semi-autonomous is far more difficult than making something fully autonomous, since in a semi-autonomous system there is still some level of user input and it needs to be heard at the right time! How do we do this?"

Behind the Scenes on how I reached here

Find below sketches, ideation and work-in-progress documentation